[Image: A rose-circuit, courtesy Linköping University].

In a newly published paper called “Electronic plants,” researchers from Linköping University in Sweden describe the process by which they were able to “manufacture” what they call “analog and digital organic electronic circuits and devices” inside living plants.

The plants not only conducted electrical signals, but, as Science News points out, the team also “induced roses leaves to light up and change color.”

Indeed, in their way of thinking, plants have been electronic gadgets all along: “The roots, stems, leaves, and vascular circuitry of higher plants are responsible for conveying the chemical signals that regulate growth and functions. From a certain perspective, these features are analogous to the contacts, interconnections, devices, and wires of discrete and integrated electronic circuits.”

[Image: Bioluminescent foxfire mushrooms (used purely for illustrative effect), via Wikipedia].

Here’s the process in a nutshell:

The idea of putting electronics directly into trees for the paper industry originated in the 1990s, while the LOE team at Linköping University was researching printed electronics on paper. Early efforts to introduce electronics into plants were made by Assistant Professor Daniel Simon, leader of the LOE’s bioelectronics team, and Professor Xavier Crispin, leader of the LOE’s solid-state device team, but a lack of funding from skeptical investors halted these projects.

Thanks to independent research money from the Knut and Alice Wallenberg Foundation in 2012, Professor Magnus Berggren was able to assemble a team of researchers to reboot the project. The team made many attempts to introduce conductive polymers through rose stems. Only one polymer, called PEDOT-S and synthesized by Dr. Roger Gabrielsson, successfully assembled itself inside the xylem channels as conducting wires while still allowing the transport of water and nutrients. Dr. Eleni Stavrinidou used the material to create long (10 cm) wires in the xylem channels of the rose. By combining the wires with the electrolyte that surrounds these channels, she was able to create an electrochemical transistor, a transistor that converts ionic signals to electronic output. Using the xylem transistors, she also demonstrated digital logic gate function.

Headily enough, using plant life as a logic gate also implies a future computational use of vegetation: living supercomputers producing their own circuits inside dual-use stems.

Previously, we have looked at the use of electricity to stimulate plants into producing certain chemicals, at how the action of plant roots growing through soil could be tapped as a future source of power, and at how soil bacteria could be wired up into huge, living battery fields. We even looked at a tongue-in-cheek design project for “growing electrical circuitry inside the trunks of living trees.” But this research actually turns vegetation into a form of living circuitry.

While Archigram’s “Logplug” project is an obvious reference point here within the world of architectural design, it seems more interesting to consider instead the future landscape design implications of technological advances such as this—how “electronic plants” might affect everything from forestry to home gardening, energy production and distribution infrastructure to a city’s lighting grid.

[Image: The “Logplug” by Archigram, from Archigram].

We looked at this latter possibility a few years ago, in fact, in a 2009 post called “The Bioluminescent Metropolis,” where the first comment now seems both prescient and somewhat sad given later developments.

But the possibilities here go beyond mere bioluminescence, toward what might someday be fully functioning electronic vegetation.

Plants could be used as interactive displays—recall the roses “induced… to light up and change color”—as well as given larger conductive roles in a region’s electrical grid. Imagine storing excess electricity from a solar power plant inside shining rose gardens, or the ability to bypass fallen power lines after a thunderstorm by re-routing a town’s electrical supply through the landscape itself, living corridors wired from within by self-assembling circuits and transistors.

And, of course, that’s all in addition to the possibility of cultivating plants specifically for their use as manufacturing systems for organic electronics—for example, cracking them open not to reveal nuts, seeds, or other consumable protein, but the flexible circuits of living computer networks. BioRAM.

There are obvious reasons to hesitate before realizing such a vision—that is, before charging headlong into a future world where forests are treated merely as back-up lighting plans for overcrowded cities and plants of every kind are seen as nothing but wildlife-disrupting sources of light cultivated for the throwaway value of human aesthetic pleasure.

Nonetheless, thinking through the design possibilities alongside the ethical risks not only seems necessary now, but might also lead someplace truly extraordinary, or someplace otherworldly, as we might say without further justification.

For now, check out the original research paper over at Science Advances.

[Image: An otherwise unrelated seed x-ray from the Bulkley Valley Research Centre].

[Image: An otherwise unrelated seed x-ray from the Bulkley Valley Research Centre]. [Image: “Higashiyama III” (1989) by Kozo Miyoshi, courtesy University of Arizona Center for Creative Photography; via but does it float].

[Image: “Higashiyama III” (1989) by Kozo Miyoshi, courtesy University of Arizona Center for Creative Photography; via but does it float]. [Image:

[Image:

[Image: Unrelated image of incredible floral shapes 3D-printed by

[Image: Unrelated image of incredible floral shapes 3D-printed by  [Image: An also unrelated project called “

[Image: An also unrelated project called “ [Image: “

[Image: “ [Image: Spot the model; from Jessica Rosenkrantz’s

[Image: Spot the model; from Jessica Rosenkrantz’s  [Image: “

[Image: “ [Image: “

[Image: “ [Image: “

[Image: “ [Image: One of Google’ Loon balloons; screen grab from

[Image: One of Google’ Loon balloons; screen grab from

[Image: A rose-circuit, courtesy Linköping University].

[Image: A rose-circuit, courtesy Linköping University]. [Image: Bioluminescent foxfire mushrooms (used purely for illustrative effect), via

[Image: Bioluminescent foxfire mushrooms (used purely for illustrative effect), via  [Image: The “Logplug” by Archigram, from

[Image: The “Logplug” by Archigram, from

[Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By  [Image: Via

[Image: Via  [Image: Via

[Image: Via  [Image: Via

[Image: Via

[Image: From Pierre Huyghe, “

[Image: From Pierre Huyghe, “

[Image: Micromotors at work, via

[Image: Micromotors at work, via  [Image: A Maltese limestone quarry, via

[Image: A Maltese limestone quarry, via

[Image: “Riggers install a lightning rod” atop the Empire State Building “in preparation for an investigation into lightning by scientists of the General Electric Company” (1947), via the

[Image: “Riggers install a lightning rod” atop the Empire State Building “in preparation for an investigation into lightning by scientists of the General Electric Company” (1947), via the  [Image: Lightning storm over Boston; via

[Image: Lightning storm over Boston; via  [Image: Lightning via

[Image: Lightning via

[Image: Snow-making equipment via

[Image: Snow-making equipment via  [Image: Snow-making equipment via

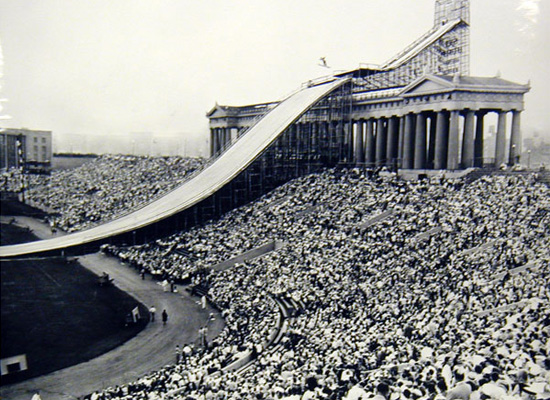

[Image: Snow-making equipment via  [Image: Ski jumping in summer at Chicago’s Soldier Field (1954); via

[Image: Ski jumping in summer at Chicago’s Soldier Field (1954); via  [Image: Making snow for It’s A Wonderful Life, via

[Image: Making snow for It’s A Wonderful Life, via