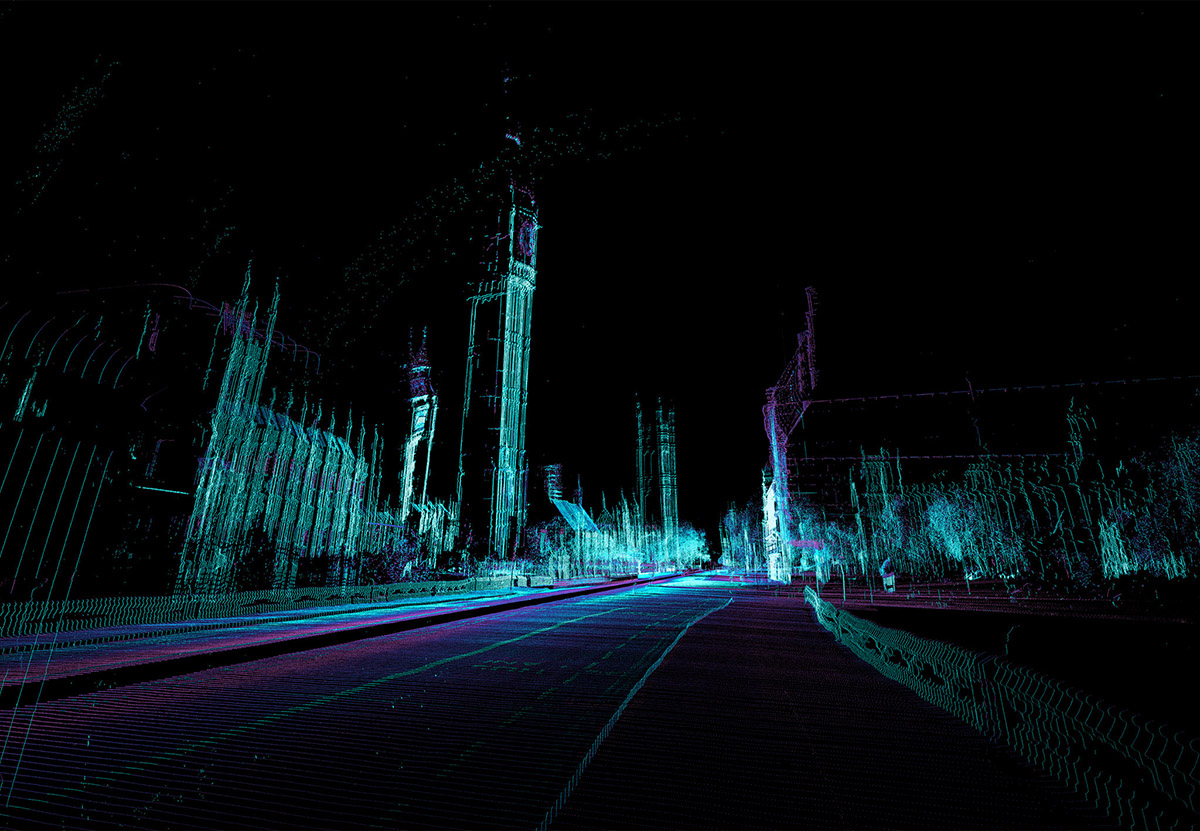

[Image: Screen-grab from an interview between John Peel and Aphex Twin, filmed in Cornwall’s Gwennap Pit; spotted via Xenogothic].

An anecdote I often use while teaching design classes—but also something I first read so long ago, I might actually be making the whole thing up—comes from an old interview with Richard D. James, aka Aphex Twin. I’ve tried some very, very lazy Googling to find the original source, but, frankly, I like the version I remember so much that I’m not really concerned with verifying its details.

In any case, the story goes like this: in an interview with a music magazine, published I believe some time in the late 1990s, James claimed that he had been hired to remix a track by, if I remember correctly, The Afghan Whigs. Whether or not it was The Afghan Whigs, the point was that James reported being so unable to come up with new ideas for the band’s music that he simply sped their original song up until it was the length of a hi-hat hit, then composed a new track of his own using that sound.

The upshot is that, if you were to slow down the resulting Aphex Twin track by several orders of magnitude, you would hear an Afghan Whigs song (or whatever) playing, in its entirety, every four or five minutes, bursting surreally out of the electronic blur before falling silent again, like a tide. Just cycling away, over and over again.
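For a rough sense of the numbers involved, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the four-minute song length, the 3,600-times speed-up, the 44.1 kHz sample rate, and all of the function names. It is not a reconstruction of anything James actually did. It shows how short the compressed song would be and how far its pitch would climb, plus a deliberately naive way of speeding audio up and slowing it back down.

```python
# Back-of-the-envelope arithmetic for the sped-up-song idea.
# All values and names here are illustrative assumptions.

SONG_SECONDS = 4 * 60   # assumed length of the original song
SPEEDUP = 3600          # assumed speed-up factor
SAMPLE_RATE = 44_100    # assumed CD-quality sample rate, in Hz


def compressed_duration(song_seconds: float, speedup: float) -> float:
    """Length in seconds of the song once it has been sped up."""
    return song_seconds / speedup


def shifted_frequency(freq_hz: float, speedup: float) -> float:
    """Frequency of a tone after plain (non-pitch-corrected) speed-up."""
    return freq_hz * speedup


def speed_up(samples: list, factor: int) -> list:
    """Naive speed-up: keep every `factor`-th sample, discard the rest."""
    return samples[::factor]


def slow_down(samples: list, factor: int) -> list:
    """Naive slow-down: repeat each sample `factor` times."""
    return [s for s in samples for _ in range(factor)]


if __name__ == "__main__":
    # Roughly 0.067 seconds: about the length of a short percussive hit.
    print(compressed_duration(SONG_SECONDS, SPEEDUP))
    # A 440 Hz note would land far above the range of human hearing.
    print(shifted_frequency(440, SPEEDUP))
    # How many samples survive if the fast version stays at 44.1 kHz.
    print(len(speed_up(list(range(SONG_SECONDS * SAMPLE_RATE)), SPEEDUP)))
```

One caveat worth keeping in mind: the naive `speed_up` above throws most of the samples away, so `slow_down` cannot bring the song back. For the slowed-down hi-hat to contain the whole track, the fast version would have to be stored at a correspondingly higher sample rate, which is part of what makes the anecdote better as a story than as a recipe.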

What’s amazing about this, at least for me, is the possibilities it implies for everything from sonic camouflage (such as hiding acoustic information inside a mere beep in the overall background sound of a room) to art installations.

Imagine a scenario, for example, in which every little bleep and bloop in a song (or TV commercial or blockbuster film or ringtone) is actually an entire other song, accelerated. Or imagine what this could do outside the field of acoustics altogether. An entire film, for example, sped up to a brief flash of light: you film the flash, slow down the resulting footage, and you’ve got 2001 playing in a public space, in full, hours compressed into a microsecond. It’s like the exact opposite of Bryan Boyer’s Very Slow Movie Player, with very fast nano-cinemas hidden in plain sight.

The world of sampling litigation, in which predatory legal teams exhaustively listen to new musical releases and flag unauthorized uses of sampled material, has been widely covered. For this, though, you’d need time cops: temporal attorneys slowing things down dramatically out of some weird fear that their client’s music has been used as a hi-hat sound…

Anyway, for context, think of the inaudible commands used to trigger Internet-of-Things devices: “The ultrasonic pitches are embedded into TV commercials or are played when a user encounters an ad displayed in a computer browser,” Ars Technica reported back in 2015. “While the sound can’t be heard by the human ear, nearby tablets and smartphones can detect it. When they do, browser cookies can now pair a single user to multiple devices and keep track of what TV commercials the person sees, how long the person watches the ads, and whether the person acts on the ads by doing a Web search or buying a product.”

Or, as the New York Times wrote in 2018, “researchers in China and the United States have begun demonstrating that they can send hidden commands that are undetectable to the human ear to Apple’s Siri, Amazon’s Alexa and Google’s Assistant. Inside university labs, the researchers have been able to secretly activate the artificial intelligence systems on smartphones and smart speakers, making them dial phone numbers or open websites. In the wrong hands, the technology could be used to unlock doors, wire money or buy stuff online—simply with music playing over the radio.”

Now imagine some malevolent Aphex Twin doing audio-engineering work for a London advertising firm, or for the intelligence services of an adversarial nation-state, embedding ultra-fast sonic triggers in the audio environment. Only, here, it would actually be some weird dystopia in which the Internet of Things is secretly run by ubiquitous Afghan Whigs songs being played at 3,600 times their intended speed.

[Don’t miss Marc Weidenbaum’s book on Aphex Twin’s Selected Ambient Works Vol. 2.]

[Image: One of Google’ Loon balloons; screen grab from

[Image: One of Google’ Loon balloons; screen grab from

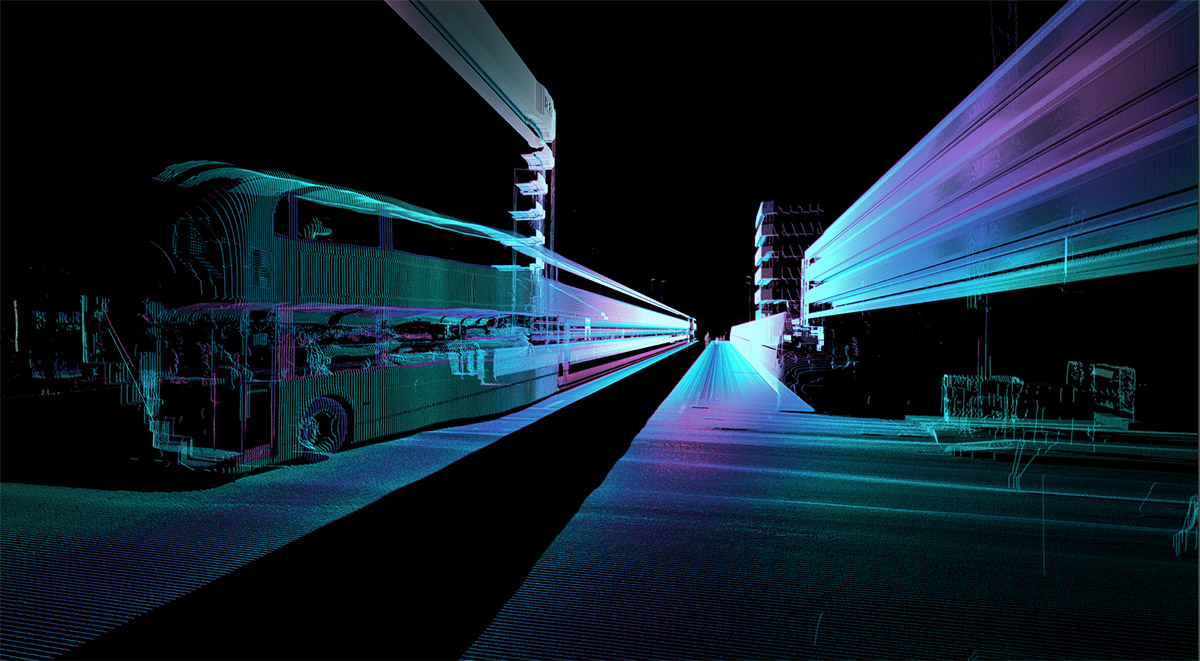

[Image by

[Image by  [Image by

[Image by