[Image: A diagram of the accident site, via the Florida Highway Patrol].

One of the most remarkable details of last week’s fatal collision, involving a tractor trailer and a Tesla electric car operating in self-driving mode, was the fact that the car apparently mistook the side of the truck for the sky.

As Tesla explained in a public statement following the accidental death, the car’s autopilot was unable to see “the white side of the tractor trailer against a brightly lit sky”—which is to say, it was unable to differentiate the two.

The truck was not seen as a discrete object, in other words, but as something indistinguishable from the larger spatial environment. It was more like an elision.

Examples like this are tragic, to be sure, but they are also technologically interesting, in that they give momentary glimpses of where robotic perception has failed. Hidden within this, then, are lessons not just for how vehicle designers and computer scientists alike could make sure this never happens again, but also precisely the opposite: how we could design spatial environments deliberately to deceive, misdirect, or otherwise baffle these sorts of semi-autonomous machines.

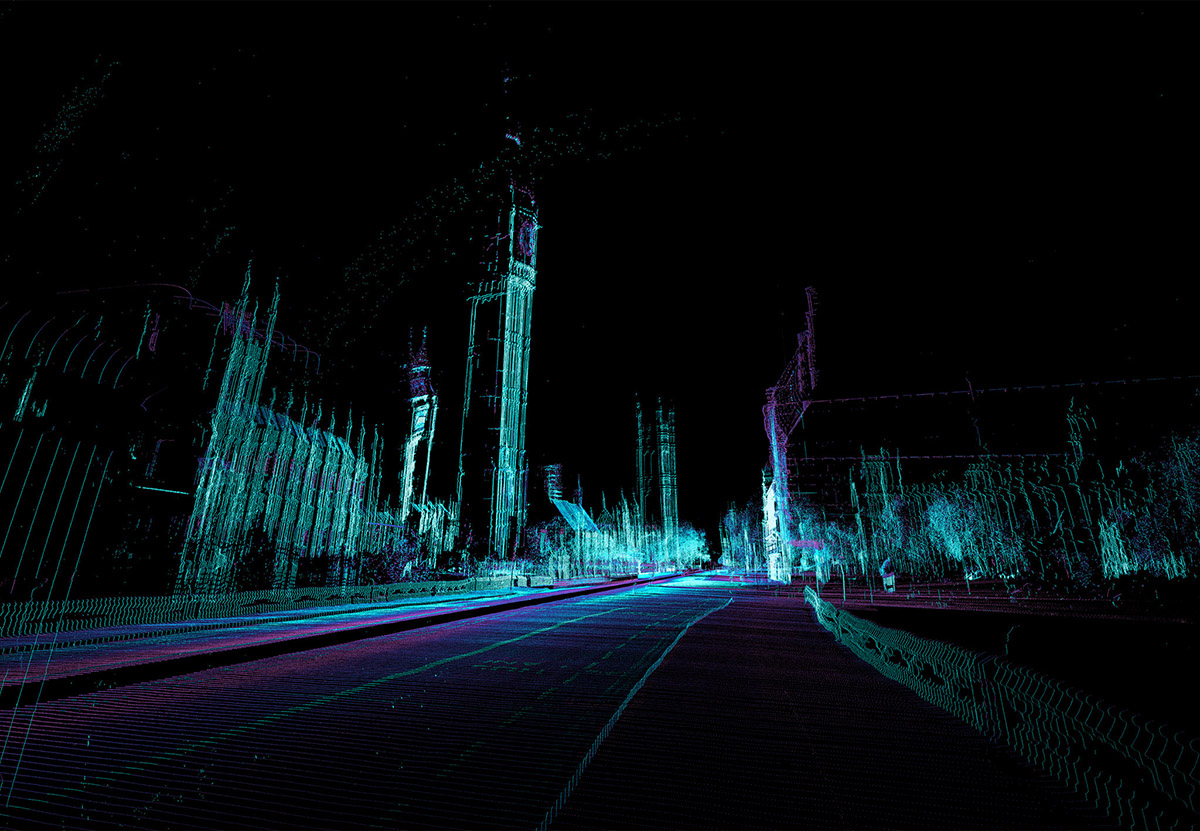

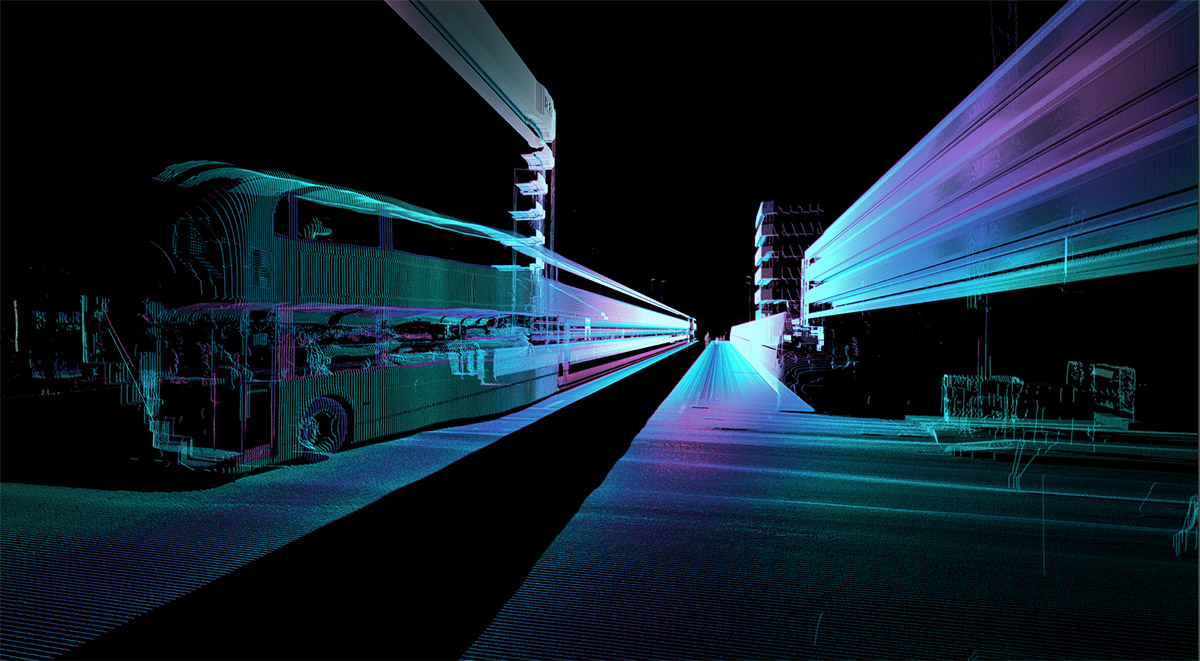

For all the talk of a “robot-readable world,” in other words, it is interesting to consider a world made deliberately illegible to robots, with materials used for throwing off 3D cameras or LiDAR, either through excess reflectivity or unexpected light-absorption.

Last summer, in a piece for New Scientist, I interviewed a robotics researcher named John Rogers, at Georgia Tech. Rogers pointed out that the perceptual needs of robots will have more and more of an effect on how architectural interiors are designed and built in the first place. Quoting that article at length:

In a detail that has implications beyond domestic healthcare, Rogers also discovered that some interiors confound robots altogether. Corridors that are lined with rubber sheeting to protect against damage from wayward robots—such as those in his lab—proved almost impossible to navigate. Why? Rubber absorbs light and prevents laser-based navigational systems from relaying spatial information back to the robot.

Mirrors and other reflective materials also threw off his robots’ ability to navigate. “It actually appeared that there was a virtual world beyond the mirror,” says Rogers. The illusion made his robots act as if there were a labyrinth of new rooms waiting to be entered and explored. When reflections from your kitchen tiles risk disrupting a robot’s navigational system, it might be time to rethink the very purpose of interior design.

I mention all this for at least two reasons.

1) It is obvious by now that the American highway system, as well as all of the vehicles that will be permitted to travel on it, will be remade as one of the first pieces of truly robot-legible public infrastructure. It will transition from being a “dumb” system of non-interactive 2D surfaces to become an immersive spatial environment filled with volumetric sign-systems meant for non-human readers. It will be rebuilt for perceptual systems other than our own.

2) Finding ways to throw off self-driving robots will be more than just a harmless prank or even a serious violation of public safety; it will become part of a much larger arsenal for self-defense during war. In other words, consider the points raised by John Rogers, above, but in a new context: you live in a city under attack by a foreign military whose use of semi-autonomous machines requires defensive means other than, or in addition to, kinetic firepower. Wheeled and aerial robots alike have been deployed.

One possible line of defense—among many, of course—would be to redesign your city, even down to the interior of your own home, such that machine vision is constantly confused there. You thus rebuild the world using light-absorbing fabrics and reflective ornament, installing projections and mirrors, screens and smoke. Or “stealth objects” and radar-baffling architectural geometries. A military robot wheeling its way into your home thus simply gets lost there, stuck in a labyrinth of perceptual convolution and reflection-implied rooms that don’t exist.

In any case, I suppose the question is: if, today, a truck can blend in with the Florida sky, and thus fatally disable a self-driving machine, what might we learn from this event in terms of how to deliberately confuse robotic military systems of the future?

We had so-called “dazzle ships” in World War I, for example, and the design of perceptually baffling military camouflage continues to undergo innovation today; but what is anti-robot architectural design, or anti-robot urban planning, and how could it be strategically deployed as a defensive tactic in war?

[Image by


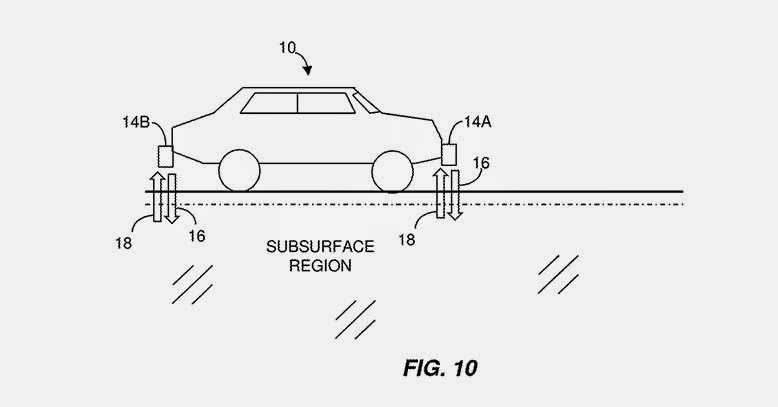

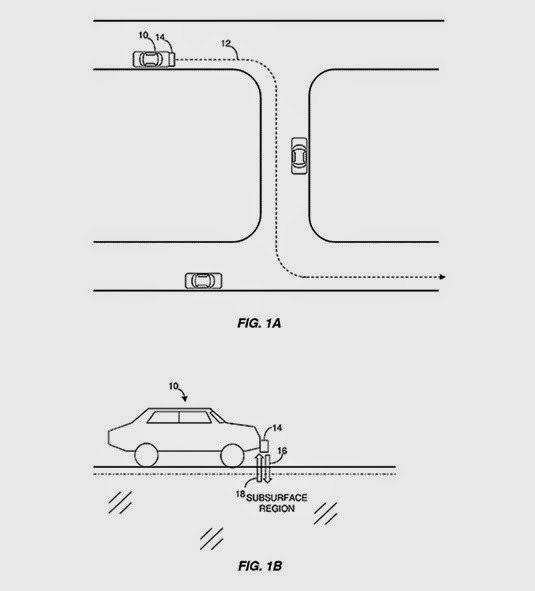

[Image: From a patent filed by MIT, courtesy
