[Image by ScanLAB Projects for The New York Times Magazine].

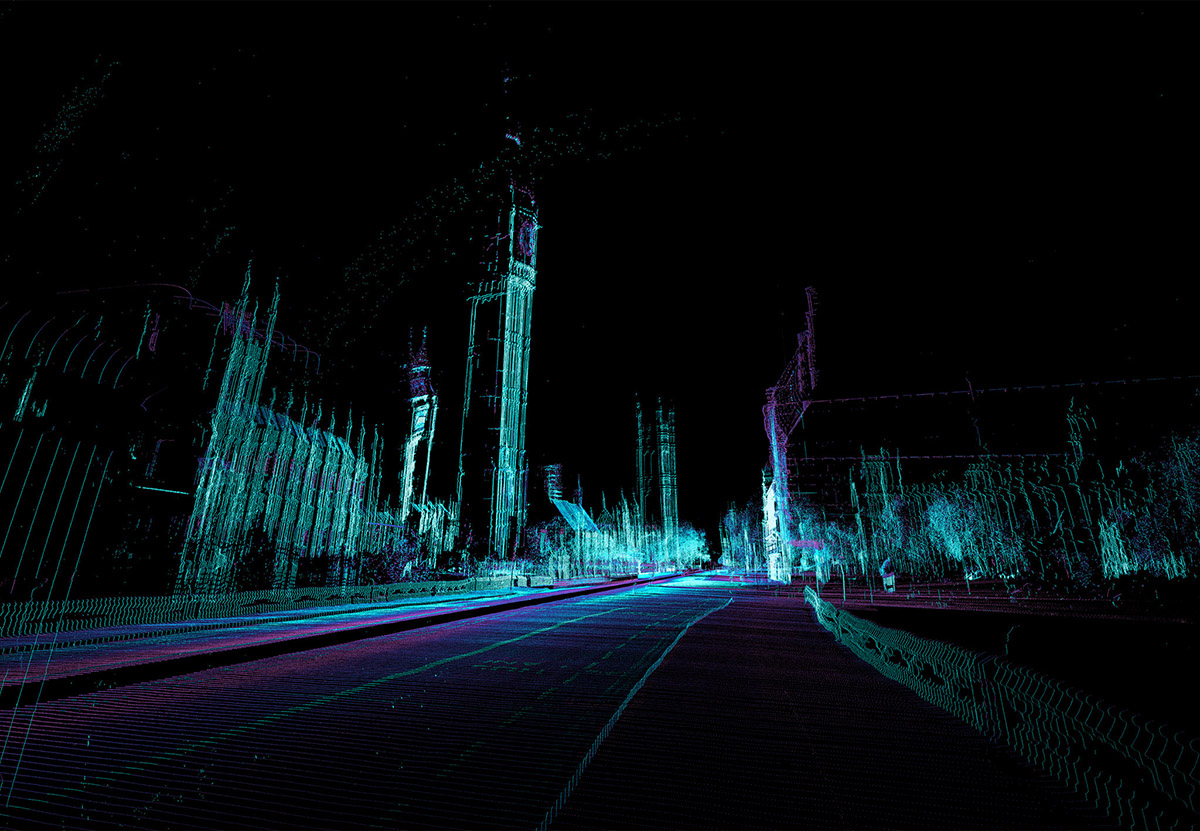

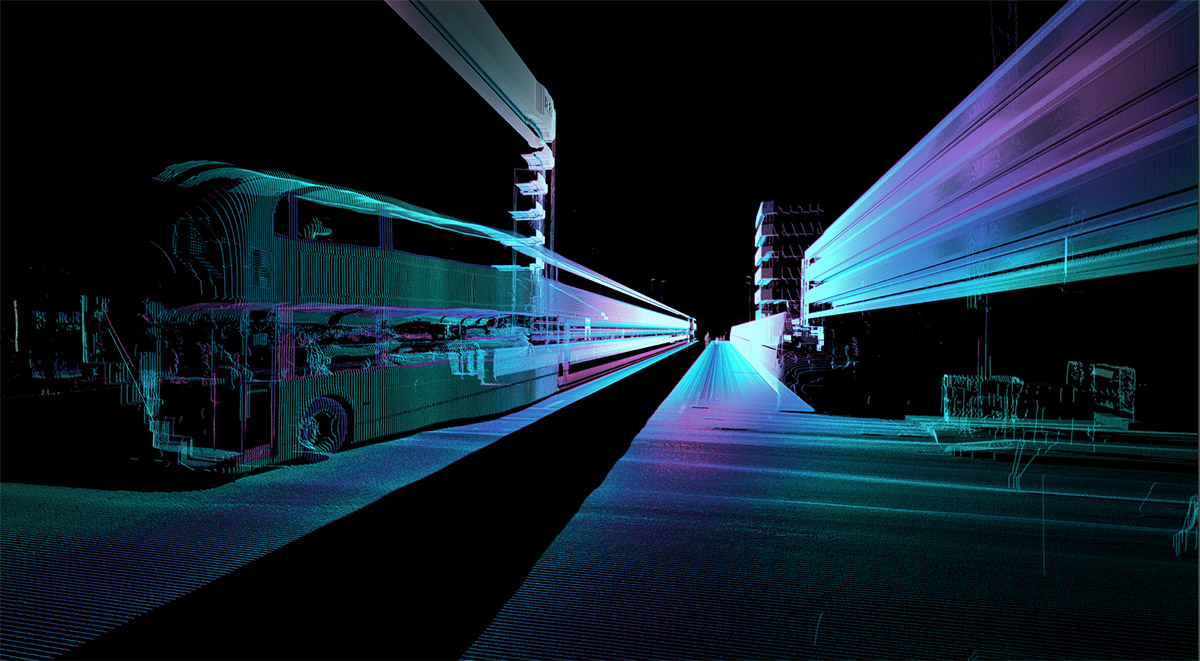

Understanding how driverless cars see the world also means understanding how they mis-see things: the duplications, glitches, and scanning errors that, precisely because of their deviation from human perception, suggest new ways of interacting with and experiencing the built environment.

Stepping into or through the scanners of autonomous vehicles in order to look back at the world from their perspective is the premise of a short feature I’ve written for this weekend’s edition of The New York Times Magazine.

For a new series of urban images, Matt Shaw and Will Trossell of ScanLAB Projects tuned, tweaked, and augmented a LiDAR unit—one of the many tools used by self-driving vehicles to navigate—and turned it instead into something of an artistic device for experimentally representing urban space.

The resulting shots show the streets, bridges, and landmarks of London transformed through glitches into “a landscape of aging monuments and ornate buildings, but also one haunted by duplications and digital ghosts”:

The city’s double-decker buses, scanned over and over again, become time-stretched into featureless mega-structures blocking whole streets at a time. Other buildings seem to repeat and stutter, a riot of Houses of Parliament jostling shoulder to shoulder with themselves in the distance. Workers setting out for a lunchtime stroll become spectral silhouettes popping up as aberrations on the edge of the image. Glass towers unravel into the sky like smoke. Trossell calls these “mad machine hallucinations,” as if he and Shaw had woken up some sort of Frankenstein’s monster asleep inside the automotive industry’s most advanced imaging technology.

Along the way I had the pleasure of speaking to Illah Nourbakhsh, a professor of robotics at Carnegie Mellon and the author of Robot Futures, a book I previously featured here on the blog back in 2013. Nourbakhsh is impressively adept at generating potential narrative scenarios (speculative accidents, we might call them) in which technology might fail or be compromised, and his take on the various perceptual risks and interpretive shortcomings posed by autonomous vehicle technology was fascinating.

Alas, only one example from our long conversation made it into the final article, but it is worth repeating. Nourbakhsh used “the metaphor of the perfect storm to describe an event so strange that no amount of programming or image-recognition technology can be expected to understand it”:

Imagine someone wearing a T-shirt with a STOP sign printed on it, he told me. “If they’re outside walking, and the sun is at just the right glare level, and there’s a mirrored truck stopped next to you, and the sun bounces off that truck and hits the guy so that you can’t see his face anymore—well, now your car just sees a stop sign. The chances of all that happening are diminishingly small—it’s very, very unlikely—but the problem is we will have millions of these cars. The very unlikely will happen all the time.”

The most interesting takeaway from this sort of scenario, however, is not that the technology is inherently flawed or limited, but that these momentary mirages and optical illusions are not, in fact, ephemeral: in a very straightforward, functional sense, they become a physical feature of the urban landscape because they exert spatial influences on the machines that (mis-)perceive them.

Nourbakhsh’s STOP sign might not “actually” be there—but it is actually there if it causes a self-driving car to stop.

Immaterial effects of machine vision become digitally material landmarks in the city, affecting traffic and influencing how machines safely operate. But, crucially, these are landmarks that remain invisible to human beings—and it is ScanLAB’s ultimate representational goal here to explore what it means to visualize them.

While, in the piece, I compare ScanLAB’s work to the heyday of European Romanticism—that ScanLAB are, in effect, documenting an encounter with sublime and inhuman landscapes that, here, are not remote mountain peaks but the engineered products of computation—writer Asher Kohn suggested on Twitter that, rather, it should be considered “Italian futurism made real,” with sweeping scenes of streets and buildings unraveling into space like digital smoke. It’s a great comparison, and worth developing at greater length.

For now, check out the full piece over at The New York Times Magazine: “The Dream Life of Driverless Cars.”

Fascinating! This reminded me of a few articles I have recently read about the soon-to-be-operational F-35 stealth fighter jet. While these jets will not be pilotless, they incorporate technology that enables the pilot to “see” in a complete 360-degree sphere, including what is behind, above, and beneath them, through the visor of their helmet or, more precisely, a representation of what the jet (which is itself virtually invisible electronically) “sees” through its vast array of sensors. How these images actually appear to the pilot is classified, so we can only conjecture about how they might be rendered. While the F-35’s vastly more complex systems offer even more possibilities than a driverless car for considering how a machine electronically perceives and misperceives the world, it is perhaps even more intriguing to consider how, after countless hours in the cockpit, this fused 360-degree vision might directly alter the pilot’s perception of the world and produce an unprecedented, orb-like dreamscape.