[Image: Self-portrait on Mars; via NASA].

Science has published a short profile of a woman named Vandi Verma. She is “one of the few people in the world who is qualified to drive a vehicle on Mars.”

Verma has driven a series of remote vehicles on another planet over the years, most recently the Curiosity rover.

[Image: Another self-portrait on Mars; via NASA].

Driving it involves a strange sequence of simulations, projections, and virtual maps that are eventually beamed out from planet to planet, the robot at the other end acting like a kind of wheeled marionette as it then spins forward along its new route. Here is a long description of the process from Science:

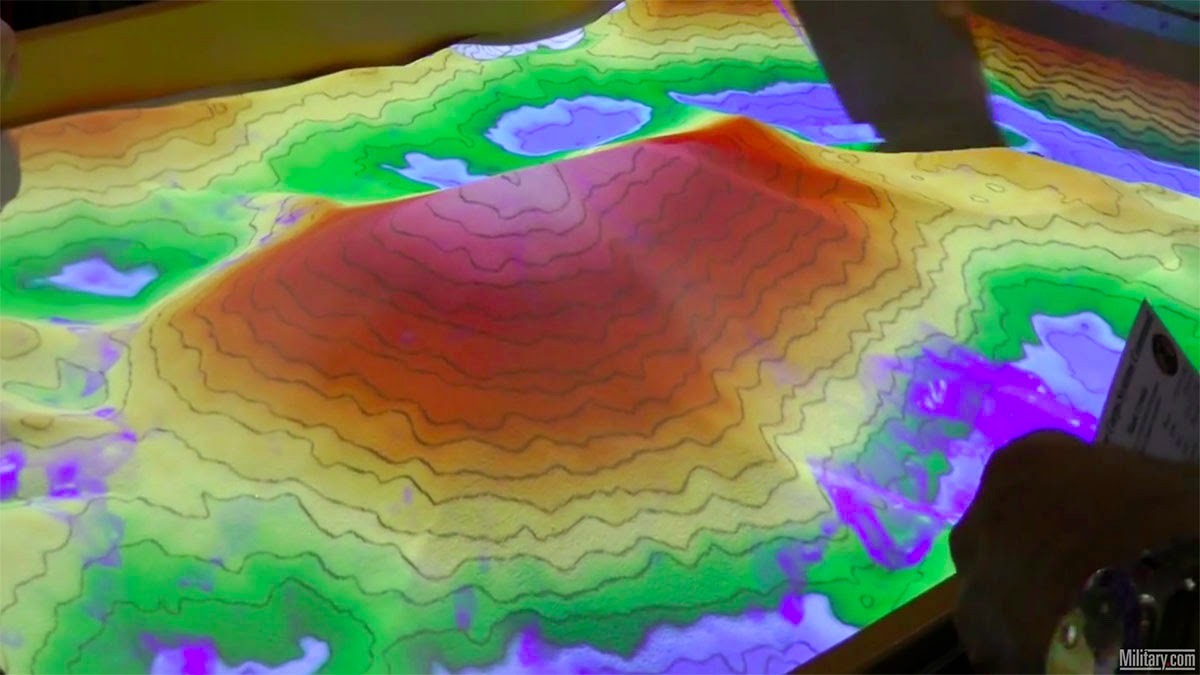

Each day, before the rover shuts down for the frigid martian night, it calls home, Verma says. Besides relaying scientific data and images it gathered during the day, it sends its precise coordinates. They are downloaded into simulation software Verma helped write. The software helps drivers plan the rover’s route for the next day, simulating tricky maneuvers. Operators may even perform a dry run with a duplicate rover on a sandy replica of the planet’s surface in JPL’s Mars Yard. Then the full day’s itinerary is beamed to the rover so that it can set off purposefully each dawn.

What’s interesting here is not just the notion of an interplanetary driver’s license—a qualification that allows one to control wheeled machines on other planets—but the fact that there is still such a clear human focus at the center of the control process.

Science’s profile of Verma begins with her driving agricultural equipment on her family farm in India, an experience that rapidly scaled up to guiding rovers across the surface of another world. That origin only reinforces the surprise here: farm equipment in India and NASA’s Mars rover program bear technical similarities.

They are, in a sense, interplanetary cousins, simultaneously conjoined and air-gapped across two worlds.

[Image: A glimpse of the dreaming; photo by Alexis Madrigal, courtesy of The Atlantic].

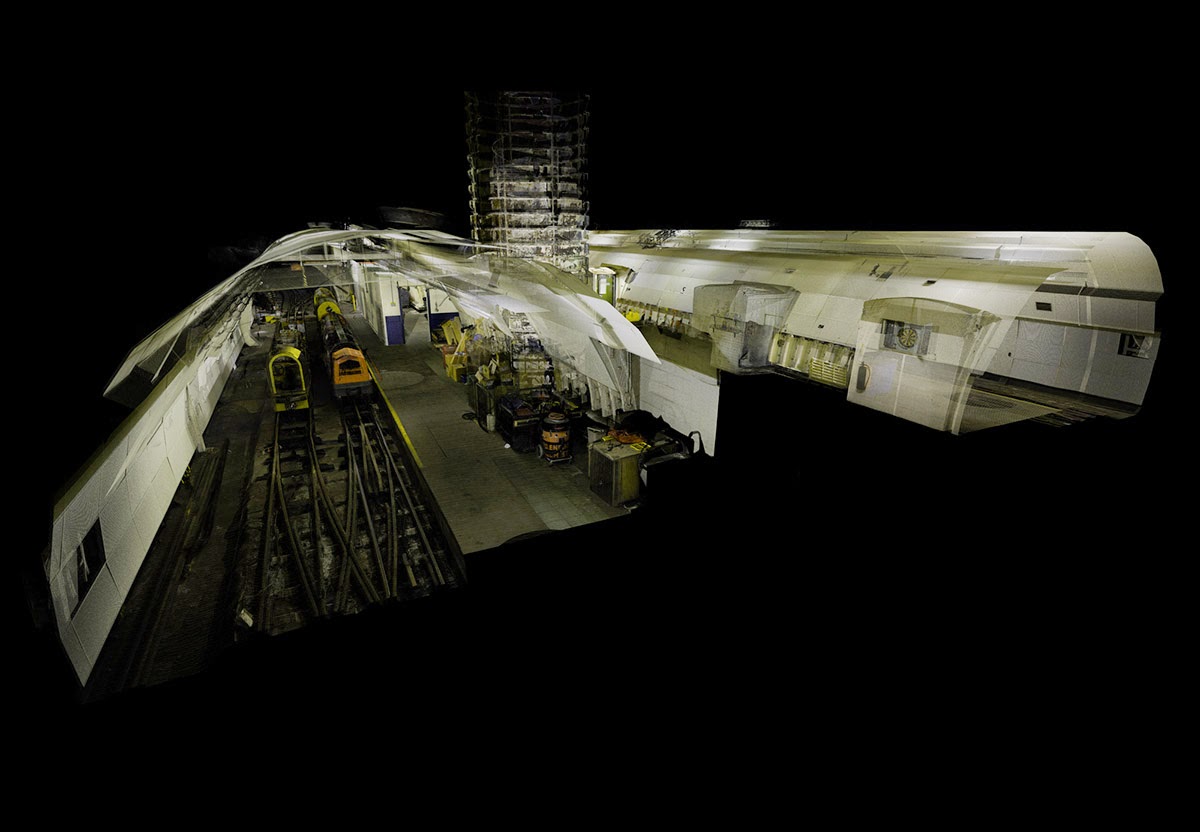

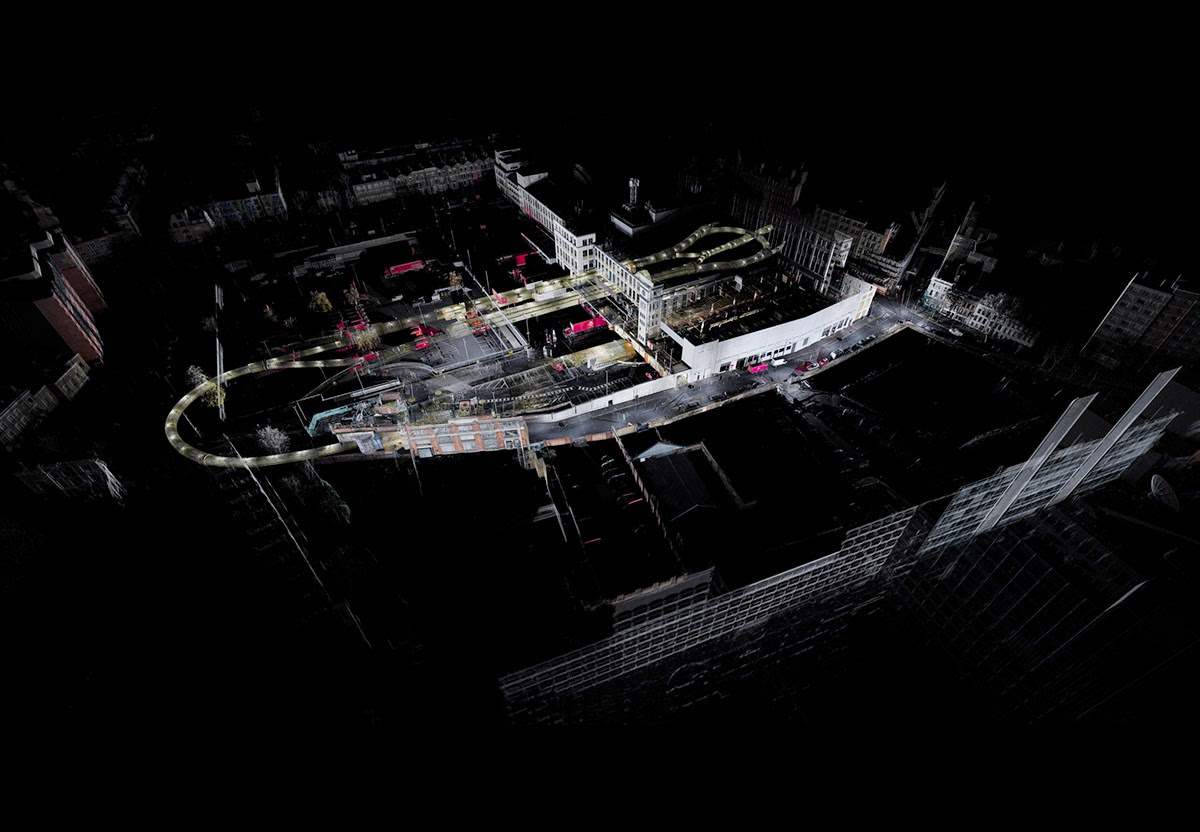

Compare this to the complex process of programming and manufacturing a driverless vehicle. In an interesting piece published last summer, Alexis Madrigal explained that Google’s self-driving cars operate inside a Borgesian 1:1 map of the physical world, a “virtual track” coextensive with the landscape you and I stand upon and inhabit.

“Google has created a virtual world out of the streets their engineers have driven,” Madrigal writes. And, like the Mars rover program we just read about, “They pre-load the data for the route into the car’s memory before it sets off, so that as it drives, the software knows what to expect.”

The software knows what to expect because the vehicle, in a sense, is not really driving on the streets outside Google’s Mountain View campus; it is driving in a seamlessly parallel simulation of those streets, never leaving the world of the map so precisely programmed into its software.

Like Christopher Walken’s character in the 1983 film Brainstorm, Google’s self-driving cars are operating inside a topographical dream state, we might say, seeing only what the headpiece allows them to see.

[Image: Navigating dreams within dreams: (top) from Brainstorm; (bottom) a Google self-driving car, via Google and re:form].

Briefly, recall a recent essay by Karen Levy and Tim Hwang called “Back Stage at the Machine Theater.” That piece looked at the atavistic holdover of old control technologies—such as steering wheels—in vehicles that are actually computer-controlled.

There is no need for a human-manipulated steering wheel, in other words, other than to offer a psychological point of focus for the vehicle’s passengers, to give them the feeling that they can still take over.

This is the “machine theater” that the title of their essay refers to: a dramaturgy made entirely of technical interfaces that deliberately produce a misleading illusion of human control. These interfaces are “placebo buttons,” they write, that transform all-but-autonomous technical systems into “theaters of volition” that still appear to be under manual guidance.

I mention this essay here because the Science piece with which this post began also explains that NASA’s rover program is being pushed toward a state of greater autonomy.

“One of Verma’s key research goals,” we read, “has been to give rovers greater autonomy to decide on a course of action. She is now working on a software upgrade that will let Curiosity be true to its name. It will allow the rover to autonomously select interesting rocks, stopping in the middle of a long drive to take high-resolution images or analyze a rock with its laser, without any prompting from Earth.”

[Image: Volitional portraiture on Mars; via NASA].

The implication here is that, as the Mars rover program becomes “self-driving,” it will also be transformed into a vast “theater of volition,” in Levy and Hwang’s formulation: Earth-bound “drivers” might soon find themselves reporting to work simply to flip placebo levers and push placebo buttons as these vehicles go about their own business far away.

It will become more ritual than science, more icon than instrument—a strangely passive experience, watching a distant machine navigate simulated terrain models and software packages coextensive with the surface of Mars.

[Image: The Very Low Frequency antenna field at Cutler, Maine, a facility for communicating with at-sea submarine crews].

[Image: The Very Low Frequency antenna field at Cutler, Maine, a facility for communicating with at-sea submarine crews]. [Image: An unmanned underwater vehicle; U.S. Navy photo by S. L. Standifird].

[Image: An unmanned underwater vehicle; U.S. Navy photo by S. L. Standifird]. [Image: The

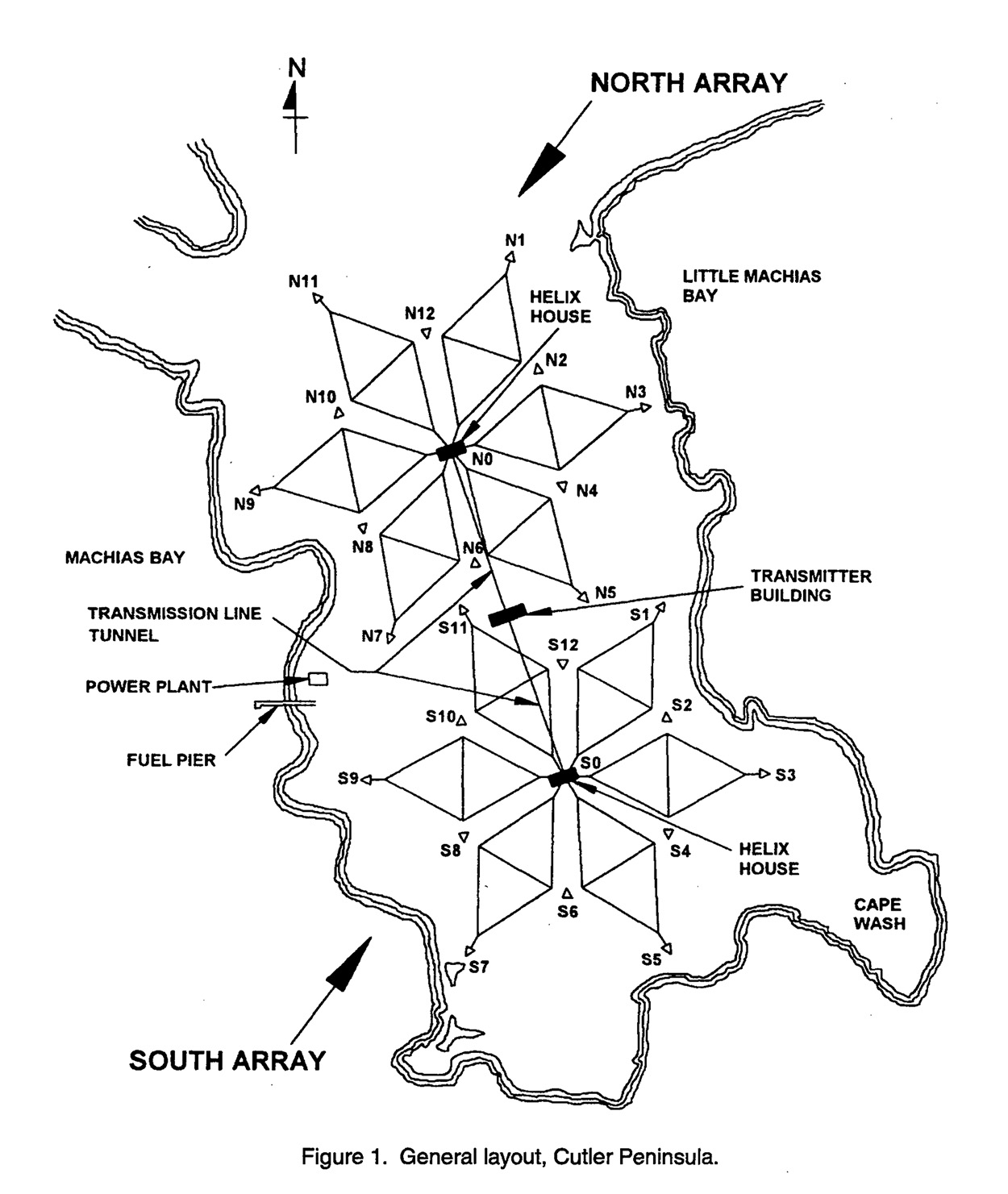

[Image: The  So what does this thing do? “The Navy’s very-low-frequency (VLF) station at Cutler, Maine, provides communication to the United States strategic submarine forces,” a January 1998 white paper called “Technical Report 1761” explains. It is basically an east coast version of the so-called

So what does this thing do? “The Navy’s very-low-frequency (VLF) station at Cutler, Maine, provides communication to the United States strategic submarine forces,” a January 1998 white paper called “Technical Report 1761” explains. It is basically an east coast version of the so-called  [Image: A diagram of the antennas, from the aforementioned January 1998 research paper].

[Image: A diagram of the antennas, from the aforementioned January 1998 research paper]. [Image: Courtesy of

[Image: Courtesy of

[Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By  [Image: By

[Image: By

[Image: A view of the

[Image: A view of the  [Image: A crane so large my iPhone basically couldn’t take a picture of it; Instagram by

[Image: A crane so large my iPhone basically couldn’t take a picture of it; Instagram by  [Images: The bottom half of the same crane; Instagram by

[Images: The bottom half of the same crane; Instagram by  [Image: Waiting for the invisible hand of Auto Schwarzenegger; Instagram by

[Image: Waiting for the invisible hand of Auto Schwarzenegger; Instagram by  [Image: Out in the acreage; Instagram by

[Image: Out in the acreage; Instagram by  [Image: One of thousands of stacked walls in the infinite labyrinth of the

[Image: One of thousands of stacked walls in the infinite labyrinth of the  [Image: One of several semi-automated gate stations around the terminal; Instagram by

[Image: One of several semi-automated gate stations around the terminal; Instagram by  [Image: Procession of the True Cross (1496) by Gentile Bellini, via

[Image: Procession of the True Cross (1496) by Gentile Bellini, via  [Image: The Container Guide; Instagram by

[Image: The Container Guide; Instagram by

[Image: The Grateful Dead “wall of sound,” via

[Image: The Grateful Dead “wall of sound,” via

[Image: From “

[Image: From “ [Image: From “

[Image: From “ [Image: From “

[Image: From “

[Images: From “

[Images: From “ [Image: A “plastiglomerate”—part plastic, part geology—photographed by

[Image: A “plastiglomerate”—part plastic, part geology—photographed by  [Image: From “

[Image: From “

[Image: The GPS contraption; photo via

[Image: The GPS contraption; photo via  [Image: Roomba-based LED art, via

[Image: Roomba-based LED art, via

[Image: Screen grab via

[Image: Screen grab via

[Image: Screen grabs via

[Image: Screen grabs via

[Image: Screen grabs via

[Image: Screen grabs via

[Image: Screen grabs via

[Image: Screen grabs via

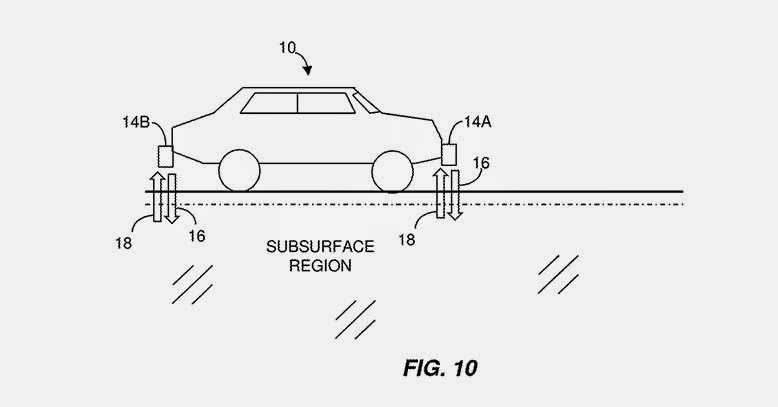

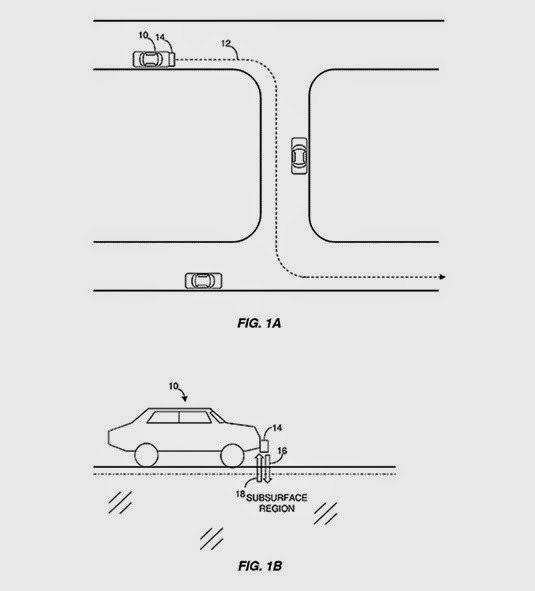

[Image: From a patent filed by MIT, courtesy

[Image: From a patent filed by MIT, courtesy  [Image: From a patent filed by MIT, courtesy

[Image: From a patent filed by MIT, courtesy  [Image: From a patent filed by MIT, courtesy

[Image: From a patent filed by MIT, courtesy

[Image: A perspectival representation of the “ideal city,” artist unknown].

[Image: A perspectival representation of the “ideal city,” artist unknown]. [Image: Jeff Bezos as perspectival historian. Courtesy of

[Image: Jeff Bezos as perspectival historian. Courtesy of  [Image: Courtesy of

[Image: Courtesy of  [Image: One of several perspectival objects—contraptions for producing spatially accurate drawings—by Albrecht Dürer].

[Image: One of several perspectival objects—contraptions for producing spatially accurate drawings—by Albrecht Dürer]. [Image: Another “ideal city,” artist unknown].

[Image: Another “ideal city,” artist unknown].

[Image: From Etienne-Gaspard Robertson’s 1834 study of technical phantasmagoria, via

[Image: From Etienne-Gaspard Robertson’s 1834 study of technical phantasmagoria, via  [Image: A “moving face” transmitted by John Logie Baird at a public demonstration of TV in 1926 (photo

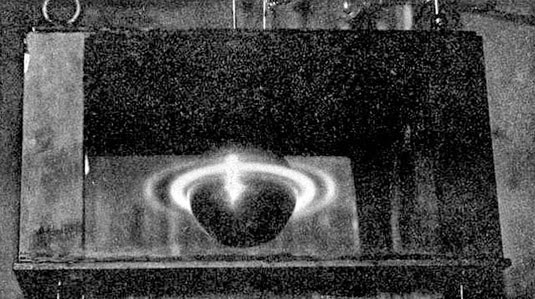

[Image: A “moving face” transmitted by John Logie Baird at a public demonstration of TV in 1926 (photo  [Image: Kristian Birkeland stares deeply into his universal simulator (

[Image: Kristian Birkeland stares deeply into his universal simulator ( [Image: From Birkeland’s The Norwegian Aurora Polaris Expedition 1902-1903, Vol. 1: On the Cause of Magnetic Storms and The Origin of Terrestrial Magnetism (

[Image: From Birkeland’s The Norwegian Aurora Polaris Expedition 1902-1903, Vol. 1: On the Cause of Magnetic Storms and The Origin of Terrestrial Magnetism ( [Image: Cropping in on the pic seen above (

[Image: Cropping in on the pic seen above (