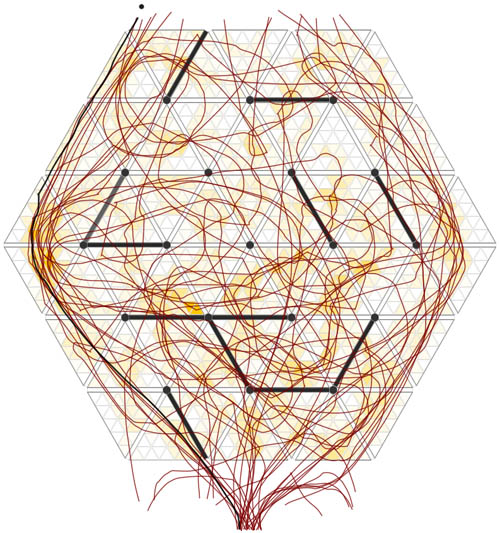

[Image: From “Putting the Fish in the Fish Tank: Immersive VR for Animal Behavior Experiments” by Sachit Butail, Amanda Chicoli, and Derek A. Paley].

I’ve had this story bookmarked for the past four years, and a tweet this morning finally gave me an excuse to write about it.

Back in 2012, we read, researchers at Harvard University found a way to fool a paralyzed fish into thinking it was navigating a virtual spatial environment. They then studied its brain during this trip that went nowhere—this virtual, unmoving navigation—in order to understand the “neuronal dynamics” of spatial perception.

As Noah Gray wrote at the time, deliberately highlighting the study’s unnerving surreality, “Paralyzed fish navigates virtual environment while we watch its brain.” Gray then compared it to The Matrix.

The paper itself explains that, when “paralyzed animals interact fictively with a virtual environment,” it results in what are called “fictive swims.”

> To study motor adaptation, we used a closed-loop paradigm and simulated a one-dimensional environment in which the fish is swept backwards by a virtual water flow, a motion that the fish was able to compensate for by swimming forwards, as in the optomotor response. In the fictive virtual-reality setup, this corresponds to a whole-field visual stimulus that is moving forwards but that can be momentarily accelerated backwards by a fictive swim of the fish, so that the fish can stabilize its virtual location over time. Remarkably, paralyzed larval zebrafish behaved readily in this closed-loop paradigm, showing similar behavior to freely swimming fish that are exposed to whole-field motion, and were not noticeably compromised by the absence of vestibular, proprioceptive and somatosensory feedback that accompanies unrestrained swimming.
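
For the curious, the closed loop described in that passage can be roughed out in a few lines of Python. This is only a toy sketch, not the researchers' code: the function name, the `flow_speed` and `swim_gain` parameters, and the time step are all invented for illustration. The virtual current drags the fish backwards, each fictive swim pushes it forwards, and the whole-field stimulus simply moves opposite to the fish's virtual velocity.

```python
def simulate_closed_loop(swim_bouts, flow_speed=1.0, swim_gain=4.0, dt=0.1):
    """Toy one-dimensional closed loop (all parameters are hypothetical).

    swim_bouts: sequence of fictive swim strengths, one per time step
                (0.0 means the fish is not attempting to swim).
    Returns a list of (virtual_position, stimulus_velocity) pairs.
    """
    position = 0.0
    history = []
    for swim in swim_bouts:
        # The virtual flow sweeps the fish backwards (negative direction);
        # a fictive swim adds forward velocity in proportion to its strength.
        velocity = -flow_speed + swim_gain * swim
        position += velocity * dt
        # The scene appears to move opposite to the fish's own motion:
        # forwards while drifting, pushed backwards during a swim.
        stimulus_velocity = -velocity
        history.append((position, stimulus_velocity))
    return history


# A fish that never swims drifts steadily backwards...
drifting = simulate_closed_loop([0.0] * 10)
# ...while one that swims just hard enough holds its virtual position.
holding = simulate_closed_loop([0.25] * 10)
```

With these made-up numbers, a swim strength of 0.25 exactly cancels the flow, which is the "stabilize its virtual location" case from the quote.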

Imagine being that fish; imagine realizing that the spatial environment you think you’re moving through is actually some sort of induced landscape put there purely for the sake of studying your neural reaction to it.

Ten years from now, experimental architecture-induction labs pop up at universities around the world, where people sit, strapped into odd-looking chairs, appearing to be asleep. They are navigating labyrinths, a scientist whispers to you, trying not to disturb them. You look around the room and see books full of mazes spread across a table, six-foot-tall full-color holograms of the human brain, and dozens of HD computer screens flashing with graphs of neural stimulation. They are walking through invisible buildings, she says.

[Image: From “Putting the Fish in the Fish Tank: Immersive VR for Animal Behavior Experiments” by Sachit Butail, Amanda Chicoli, and Derek A. Paley].

In any case, the fish-in-virtual-reality setup was apparently something of a trend in 2012, because there was also a paper published that year called “Putting the Fish in the Fish Tank: Immersive VR for Animal Behavior Experiments,” this time by researchers at the University of Maryland. Their goal was to “startle” fish using virtual reality:

> We describe a virtual-reality framework for investigating startle-response behavior in fish. Using real-time three dimensional tracking, we generate looming stimuli at a specific location on a computer screen, such that the shape and size of the looming stimuli change according to the fish’s perspective and location in the tank.
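
To get a feel for what "change according to the fish's perspective" might involve, here is a small geometric sketch in Python. It is an assumption-laden simplification, not the Maryland team's method: it treats the looming object as a disc approaching head-on, with the screen perpendicular to the fish's line of sight, and every name in it is hypothetical.

```python
import math


def angular_size(object_radius, virtual_distance):
    """Visual angle (radians) of a disc seen head-on from the fish's eye."""
    return 2.0 * math.atan(object_radius / virtual_distance)


def screen_radius(object_radius, virtual_distance, fish_to_screen):
    """Radius to draw on the screen so the disc subtends the right angle.

    By similar triangles, a disc of radius r at virtual distance d looks
    the same as a disc of radius s * r / d drawn on a screen at distance s.
    """
    return fish_to_screen * object_radius / virtual_distance


# As the virtual object closes in, the drawn disc grows quickly;
# a tracked fish nearer the screen would need a smaller drawn disc.
for d in (40.0, 20.0, 10.0, 5.0):
    r = screen_radius(object_radius=2.0, virtual_distance=d, fish_to_screen=10.0)
    print(f"virtual distance {d:5.1f} -> drawn radius {r:.2f}")
```

The point of tracking the fish in three dimensions, on this reading, is that `fish_to_screen` keeps changing, so the drawn shape has to be recomputed continuously to keep the illusion consistent.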

As they point out, virtual reality can be a fantastic tool for studying spatial perception. VR, they write, “provides a novel opportunity for high-output biological data collection and allows for the manipulation of sensory feedback. Virtual reality paradigms have been harnessed as an experimental tool to study spatial navigation and memory in rats, flight control in flies and balance studies in humans.”

But why stop at fish? Why stop at fish tanks? Why not whole virtual landscapes and ecosystems?

Imagine a lost bear running around a forest somewhere, slipping between birch trees and wildflowers, the sun a blinding light that stabs down through branches in disorienting flares. There are jagged rocks and dew-covered moss everywhere. But it’s not a forest. The bear looks around. There are no other animals, and there haven’t been for days. Perhaps not for years. It can’t remember. It can’t remember how it got there. It can’t remember where to go.

It’s actually stuck in a kind of ursine Truman Show: an induced forest of virtual spatial stimuli. And the bear isn’t running at all; it’s strapped down inside an MRI machine in Baltimore. Its brain is being watched—as its brain watches the well-rendered polygons of these artificial rocks and trees.

(Fish tank story spotted via Clive Thompson. Vaguely related: The Subterranean Machine Dreams of a Paralyzed Youth in Los Angeles).

[Image: From “Labyrinths, Mazes and the Spaces Inbetween” by Sam McElhinney].

[Images: Movement-typologies from “Labyrinths, Mazes and the Spaces Inbetween” by Sam McElhinney].

[Images: From “Labyrinths, Mazes and the Spaces Inbetween” by Sam McElhinney].

[Image: Sam McElhinney’s “switching labyrinth,” or psycho-cybernetic human navigational testing ground, constructed near Euston Station].

[Images: Sam McElhinney’s “switching labyrinth”].

[Images: Maze-studies from “Labyrinths, Mazes and the Spaces Inbetween” by Sam McElhinney].

[Image: A glimpse of

[Image: A glimpse of  [Image: A squadron of drones awaits its orders].

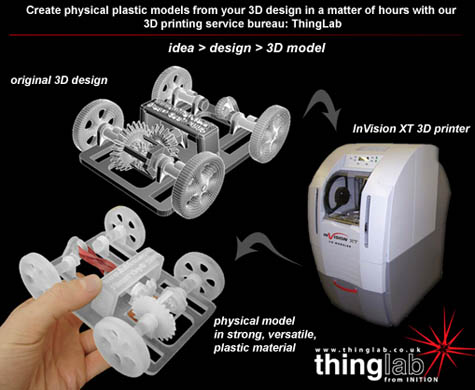

[Image: A squadron of drones awaits its orders]. [Image: 3D printing, via

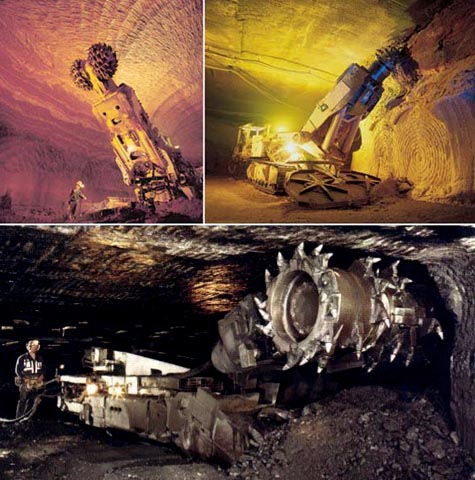

[Image: 3D printing, via  [Image: Three varieties of underground mining machine].

[Image: Three varieties of underground mining machine]. [Image: The gardens at Versailles, via

[Image: The gardens at Versailles, via